Hey everyone,

I am trying to use a local LLM together with MCP servers in CrewAI.

In the verbose log I can see that the LLM identifies the correct tool, runs it, and receives the tool's output, but right after that step it throws an APIConnectionError.

Here is my code snippet:

from crewai import Agent, Task, Crew, LLM, Process
from crewai_tools import MCPServerAdapter
from mcp import StdioServerParameters
import os

server_params = StdioServerParameters(
    command="uv",  # Or your python3 executable i.e. "python3"
    args=["run", "/home/load_testing_stdio/server.py"],
)

with MCPServerAdapter(server_params) as tools:
    print(f"Available tools from Stdio MCP server: {[tool.name for tool in tools]}")

    my_llm = LLM(
        model="ollama/llama3.2",
        base_url="http://localhost:11434",
        streaming=True
    )

    # Example: Using the tools from the Stdio MCP server in a CrewAI Agent
    agent = Agent(
        role="Hash Calculator",
        goal="compute hash of a given string",
        backstory="You are a hash calculator, you compute hash of a given string",
        tools=tools,
        verbose=True,
        allow_delegation=False,
        llm=my_llm,
    )

    task = Task(
        description="Compute hash for the string 'hello world'",
        expected_output="return hash of specified string.",
        agent=agent,
    )

    crew = Crew(
        agents=[agent],
        tasks=[task],
        verbose=True,
        process=Process.sequential,
    )

    result = crew.kickoff()
    # print(result)

Here is the console output:

/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/pydantic/fields.py:1093: PydanticDeprecatedSince20: Using extra keyword arguments on `Field` is deprecated and will be removed. Use `json_schema_extra` instead. (Extra keys: 'required'). Deprecated in Pydantic V2.0 to be removed in V3.0. See Pydantic V2 Migration Guide at https://errors.pydantic.dev/2.11/migration/

warn(

[06/30/25 11:13:16] INFO Processing request of type ListToolsRequest server.py:619

INFO Processing request of type ListToolsRequest server.py:619

/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/pydantic/fields.py:1093: PydanticDeprecatedSince20: Using extra keyword arguments on `Field` is deprecated and will be removed. Use `json_schema_extra` instead. (Extra keys: 'items', 'anyOf', 'enum', 'properties'). Deprecated in Pydantic V2.0 to be removed in V3.0. See Pydantic V2 Migration Guide at https://errors.pydantic.dev/2.11/migration/

warn(

Available tools from Stdio MCP server: ['generate_md5_hash', 'count_characters', 'get_first_half']

╭─────────────────────────────────────────────────────────────────── Crew Execution Started ────────────────────────────────────────────────────────────────────╮

│ │

│ Crew Execution Started │

│ Name: crew │

│ ID: c6e83991-152f-43ff-a696-8c02b5ccbe8e │

│ Tool Args: │

│ │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

🚀 Crew: crew

└── 📋 Task: 722ec58f-28ff-4a12-bcaa-c6055bfaf11d

Status: Executing Task...

╭────────────────────────────────────────────────────────────────────── 🤖 Agent Started ───────────────────────────────────────────────────────────────────────╮

│ │

│ Agent: Hash Calculator │

│ │

│ Task: Compute hash for the string 'hello world' │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

🚀 Crew: crew

└── 📋 Task: 722ec58f-28ff-4a12-bcaa-c6055bfaf11d

Status: Executing Task...

🚀 Crew: crew

└── 📋 Task: 722ec58f-28ff-4a12-bcaa-c6055bfaf11d

Status: Executing Task...

└── 🔧 Used generate_md5_hash (1)

╭─────────────────────────────────────────────────────────────────── 🔧 Agent Tool Execution ───────────────────────────────────────────────────────────────────╮

│ │

│ Agent: Hash Calculator │

│ │

│ Thought: Thought: I need to compute the hash of the input string 'hello world' │

│ │

│ Using Tool: generate_md5_hash │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

╭───────────────────────────────────────────────────────────────────────── Tool Input ──────────────────────────────────────────────────────────────────────────╮

│ │

│ "{\"input_str\": \"hello world\"}" │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

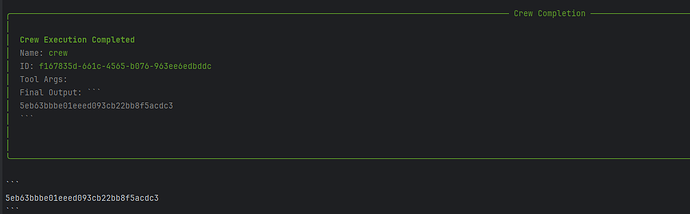

╭───────────────────────────────────────────────────────────────────────── Tool Output ─────────────────────────────────────────────────────────────────────────╮

│ │

│ 5eb63bbbe01eeed093cb22bb8f5acdc3 │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

🚀 Crew: crew

└── 📋 Task: 722ec58f-28ff-4a12-bcaa-c6055bfaf11d

Status: Executing Task...

├── 🔧 Used generate_md5_hash (1)

└── ❌ LLM Failed

An unknown error occurred. Please check the details below.

🚀 Crew: crew

└── 📋 Task: 722ec58f-28ff-4a12-bcaa-c6055bfaf11d

Assigned to: Hash Calculator

Status: ❌ Failed

├── 🔧 Used generate_md5_hash (1)

└── ❌ LLM Failed

╭──────────────────────────────────────────────────────────────────────── Task Failure ─────────────────────────────────────────────────────────────────────────╮

│ │

│ Task Failed │

│ Name: 722ec58f-28ff-4a12-bcaa-c6055bfaf11d │

│ Agent: Hash Calculator │

│ Tool Args: │

│ │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

╭──────────────────────────────────────────────────────────────────────── Crew Failure ─────────────────────────────────────────────────────────────────────────╮

│ │

│ Crew Execution Failed │

│ Name: crew │

│ ID: c6e83991-152f-43ff-a696-8c02b5ccbe8e │

│ Tool Args: │

│ Final Output: │

│ │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

Traceback (most recent call last):

response = base_llm_http_handler.completion(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

data = provider_config.transform_request(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

modified_prompt = ollama_pt(model=model, messages=messages)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

tool_calls = messages[msg_i].get("tool_calls")

~~~~~~~~^^^^^^^

IndexError: list index out of range

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/smrati/smrati_linux_subsystem/learning/weather/crewai_mcp_server_integration/stdio_integration.py", line 44, in <module>

result = crew.kickoff()

^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/crew.py", line 659, in kickoff

result = self._run_sequential_process()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/crew.py", line 768, in _run_sequential_process

return self._execute_tasks(self.tasks)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/crew.py", line 871, in _execute_tasks

task_output = task.execute_sync(

^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/task.py", line 354, in execute_sync

return self._execute_core(agent, context, tools)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/task.py", line 502, in _execute_core

raise e # Re-raise the exception after emitting the event

^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/task.py", line 418, in _execute_core

result = agent.execute_task(

^^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/agent.py", line 435, in execute_task

raise e

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/agent.py", line 411, in execute_task

result = self._execute_without_timeout(task_prompt, task)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/agent.py", line 507, in _execute_without_timeout

return self.agent_executor.invoke(

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/agents/crew_agent_executor.py", line 125, in invoke

raise e

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/agents/crew_agent_executor.py", line 114, in invoke

formatted_answer = self._invoke_loop()

^^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/agents/crew_agent_executor.py", line 210, in _invoke_loop

raise e

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/agents/crew_agent_executor.py", line 157, in _invoke_loop

answer = get_llm_response(

^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/utilities/agent_utils.py", line 160, in get_llm_response

raise e

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/utilities/agent_utils.py", line 151, in get_llm_response

answer = llm.call(

^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/llm.py", line 956, in call

return self._handle_non_streaming_response(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/smrati/smrati_linux_subsystem/learning/weather/.venv/lib/python3.11/site-packages/crewai/llm.py", line 768, in _handle_non_streaming_response

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

raise e

result = original_function(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

raise exception_type(

^^^^^^^^^^^^^^^

raise e

raise APIConnectionError(

.APIConnectionError:

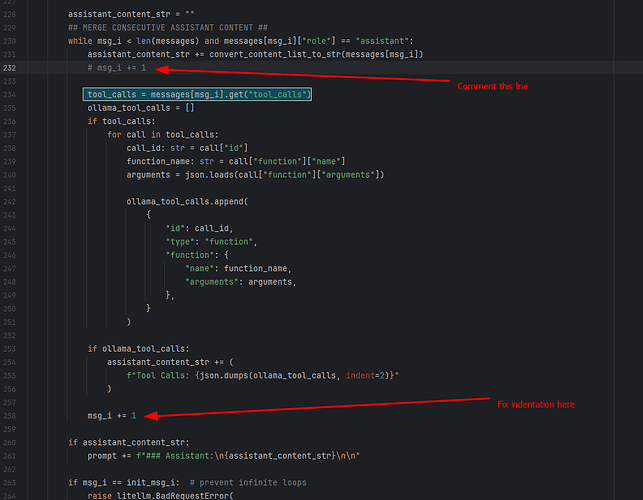

As you can see, the LLM is able to fetch the tools from the MCP server and capture the tool's output, but after that CrewAI throws an error.
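
For anyone reading the first traceback: the `IndexError: list index out of range` is raised at `messages[msg_i].get("tool_calls")` inside `ollama_pt`, before any request reaches Ollama, so the APIConnectionError looks like a wrapper around that IndexError rather than a real network problem. Below is a minimal sketch of how an index like `msg_i` can run past the end of the message list. It is a simplified, hypothetical reconstruction and not LiteLLM's actual code; the function names, the message contents, and the assumption that the second LLM call ends with an assistant message are all made up for illustration.

```python
def collect_tool_calls(messages):
    """Hypothetical walk over a chat history (NOT LiteLLM's real code).

    After stepping past an assistant message, it reads messages[msg_i]
    without checking that msg_i is still inside the list.
    """
    msg_i = 0
    found = []
    while msg_i < len(messages):
        if messages[msg_i]["role"] == "assistant":
            msg_i += 1
            # Unguarded read: if the assistant message was the last one,
            # msg_i == len(messages) here and this raises IndexError.
            tool_calls = messages[msg_i].get("tool_calls")
            if tool_calls:
                found.extend(tool_calls)
        else:
            msg_i += 1
    return found


def collect_tool_calls_guarded(messages):
    """Same walk, but the bound is re-checked before the read."""
    msg_i = 0
    found = []
    while msg_i < len(messages):
        if messages[msg_i]["role"] == "assistant":
            msg_i += 1
            if msg_i < len(messages):
                tool_calls = messages[msg_i].get("tool_calls")
                if tool_calls:
                    found.extend(tool_calls)
        else:
            msg_i += 1
    return found


# Assumed shape of the first call: the history ends with a user message.
first_call = [
    {"role": "system", "content": "You are a hash calculator."},
    {"role": "user", "content": "Compute hash for the string 'hello world'"},
]

# Assumed shape of the call after the tool ran: the history now ends
# with an assistant message that carries the tool observation.
second_call = first_call + [
    {"role": "assistant", "content": "Observation: 5eb63bbbe01eeed093cb22bb8f5acdc3"},
]

print(collect_tool_calls(first_call))
try:
    collect_tool_calls(second_call)
except IndexError as exc:
    print("IndexError:", exc)
print(collect_tool_calls_guarded(second_call))
```

If this guess is right, it would explain why the first LLM call succeeds and only the call after the tool output fails. LiteLLM also documents an `ollama_chat/` model prefix that targets Ollama's chat endpoint; whether switching to it avoids this code path is unverified here.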