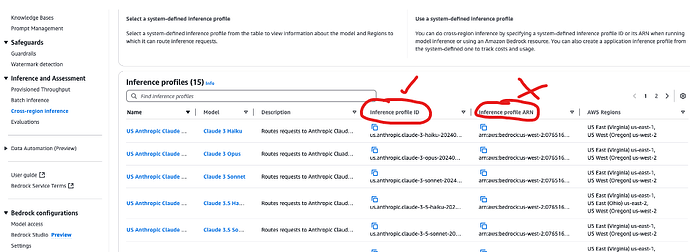

Hey all, I’m using CrewAI with Bedrock and I’m running into problems with some models. Everything works fine if I set `MODEL = bedrock/anthropic.claude-3-sonnet-20240229-v1:0`, but when I try `MODEL = bedrock/anthropic.claude-3-7-sonnet-20250219-v1:0` I get an error. The error says that on-demand throughput isn’t supported for this model, and the AWS docs say that in this case you need to create an inference profile and supply the ARN of the inference profile in place of the model ID. I’ve done that, but now I’m getting the following error:

    raise exception_type(
    model, custom_llm_provider, dynamic_api_key, api_base = get_llm_provider(
                                                            ^^^^^^^^^^^^^^^^^
    raise e
    .BadRequestError:
    An error occurred while running the crew: Command '['uv', 'run', 'run_crew']' returned non-zero exit status 1.

Looking through the Bedrock section of the CrewAI docs, I also see that these models aren’t listed. Does CrewAI simply not support models that require an inference profile ARN, or am I screwing something up? Thanks in advance!
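The AWS guidance described above involves three different kinds of identifier: a plain foundation model ID, a system-defined inference profile ID, and an inference profile ARN. It is easy to paste the wrong one after `bedrock/`. The following is a minimal sketch, assuming the ARN layout `arn:aws:bedrock:<region>:<account>:inference-profile/<id>` and assuming that cross-region profile IDs carry a geographic prefix such as `us.` (the prefix set below is an assumption; extend it as needed). The helpers `describe_bedrock_identifier` and `crewai_model_string` are hypothetical, not CrewAI, LiteLLM, or boto3 APIs. They only classify the string. Whether CrewAI, or LiteLLM (whose `get_llm_provider` appears in the traceback), accepts a full ARN at this point is exactly the open question, and nothing below confirms it.

```python
import re
from typing import Dict

# Hypothetical helpers for illustration only: NOT part of CrewAI, LiteLLM,
# or boto3. They classify the Bedrock identifier placed after "bedrock/".

# Assumed geographic prefixes of system-defined cross-region inference
# profile IDs (e.g. "us.anthropic.claude-..."); extend if needed.
PROFILE_PREFIXES = ("us", "eu", "apac")

# Assumed ARN layout: arn:<partition>:bedrock:<region>:<account>:<type>/<resource>
# Foundation-model ARNs leave the account field empty.
BEDROCK_ARN = re.compile(
    r"^arn:(?P<partition>aws[a-z-]*):bedrock:(?P<region>[a-z0-9-]+):"
    r"(?P<account>\d{12})?:"
    r"(?P<kind>foundation-model|inference-profile|application-inference-profile)"
    r"/(?P<resource>.+)$"
)


def describe_bedrock_identifier(identifier: str) -> Dict[str, str]:
    """Classify a Bedrock model ID, inference profile ID, or ARN."""
    match = BEDROCK_ARN.match(identifier)
    if match:
        info = match.groupdict()
        info["account"] = info["account"] or ""  # empty for foundation models
        info["form"] = "arn"
        return info
    prefix, _, rest = identifier.partition(".")
    if prefix in PROFILE_PREFIXES and "." in rest:
        # e.g. "us.anthropic.claude-3-7-sonnet-20250219-v1:0"
        return {"form": "profile-id", "kind": "inference-profile", "resource": identifier}
    if rest:
        # e.g. "anthropic.claude-3-sonnet-20240229-v1:0"
        return {"form": "model-id", "kind": "foundation-model", "resource": identifier}
    raise ValueError(f"Unrecognized Bedrock identifier: {identifier!r}")


def crewai_model_string(identifier: str) -> str:
    """Build a MODEL value in the 'bedrock/<identifier>' form used in the post."""
    describe_bedrock_identifier(identifier)  # raises ValueError on malformed input
    return f"bedrock/{identifier}"
```

For example, `crewai_model_string("us.anthropic.claude-3-7-sonnet-20250219-v1:0")` returns `"bedrock/us.anthropic.claude-3-7-sonnet-20250219-v1:0"`. Trying the profile ID form instead of the full ARN is one thing worth testing, though that is also an assumption rather than documented CrewAI behavior.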

Cheers!